Our Research Areas

20 MAY, 2020

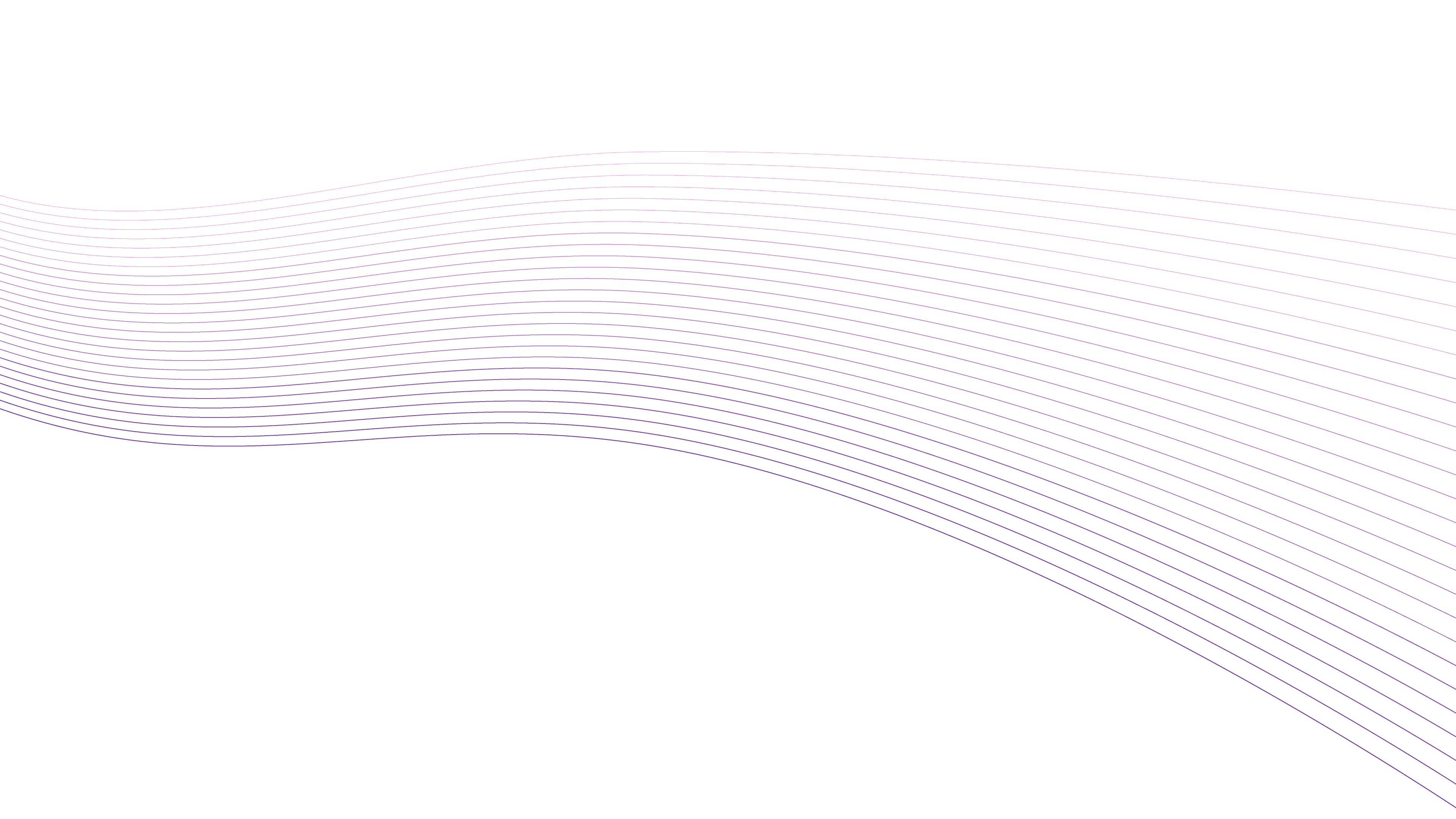

Examining the State-of-the-Art in News Timeline Summarization

In this paper, we compare different TLS strategies using appropriate evaluation frameworks, and propose a simple and effective combination of methods that improves over the state-of-the-art on all tested benchmarks. For a more robust evaluation, we also present a new TLS dataset, which is larger and spans longer time periods than previous datasets.

20 MAY, 2020

A Large-Scale Multi-Document Summarization Dataset from the Wikipedia Current Events Portal

Multi-document summarization (MDS) aims to compress the content in large document collections into short summaries and has important applications in story clustering for newsfeeds, presentation of search results, and timeline generation.

1 FEB, 2019

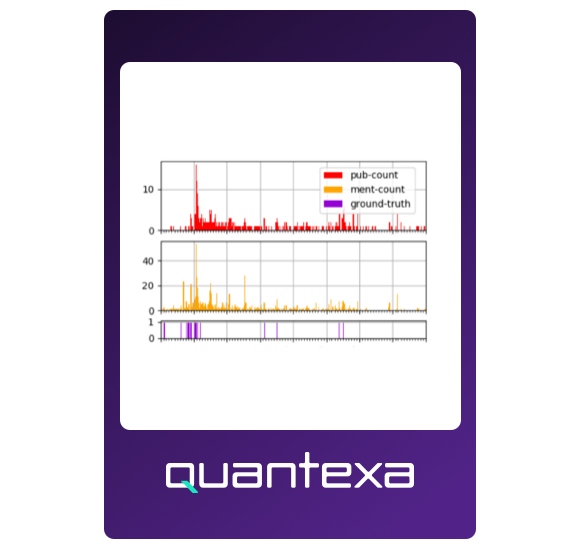

Latent Multi-task Architecture Learning

We present experiments on synthetic data and data from OntoNotes 5.0, including four different tasks and seven different domains. Our extension consistently outperforms previous approaches to learning latent architectures for multi-task problems and achieves up to 15% average error reductions over common approaches to MTL.

-

Research

Research01 Apr, 2021

Adventures in Multi-Document Summarization: The Wikipedia Current Events Portal Dataset

Demian Gholipour

13 Min Read

-

Data Science

Data Science30 Jul, 2020

NEWS API: A Starter Guide for Python Users

Eoin Kilbride

6 Min Read

-

Data Science

Data Science22 Sep, 2016

A Hierarchical Model of Reviews for Aspect-based Sentiment Analysis

Sebastian Ruder

7 Min Read

-

General

General01 Oct, 2018

A Review of the Neural History of Natural Language Processing

Sebastian Ruder

9 Min Read

-

Data Science

Data Science24 Aug, 2016

An introduction to Generative Adversarial Networks (with code in TensorFlow)

John Glover

12 Min Read

-

Research

Research13 Oct, 2016

An overview of word embeddings and their connection to distributional semantic models

Sebastian Ruder

6 Min Read